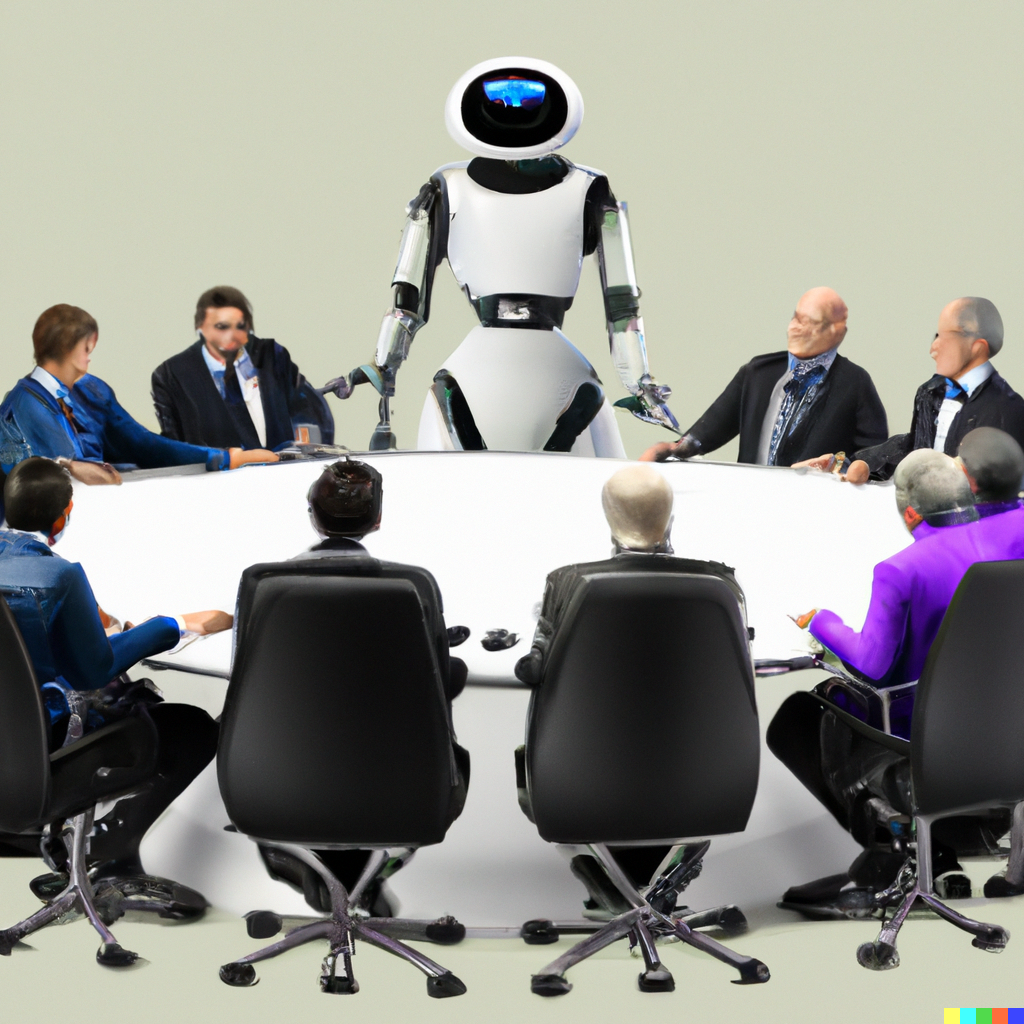

CoLM: a consensus language model

Germany, its partners, and the European Union have cooperated to invest in AI research and the further development of state-of-the-art AI, including large language models (LLMs) for government use. Groups of investors, political scientists, linguists, cognitive scientists, and other scientists have developed CoLM, a model trained to aid committee work. Besides summarizing expert knowledge (Specian, 2023), CoLM can propose consensus statements that all participants are likely to agree with when a debate becomes too heated or a discussion reaches a dead end.

In addition to standard LLM training, the model was trained explicitly on political texts and background knowledge to deepen its latent knowledge in this area. On top of that, a reward model was used to fine-tune CoLM to generate agreeable texts based on a number of different opinions (Bakker et al., 2022). Committee members are asked to give feedback on CoLM’s performance, and they can also complain to a designated office if they consider its output inappropriate, unhelpful, or otherwise bothersome. Its answers are also saved and evaluated at regular intervals so that the model can be modified further. The use of CoLM is considered safe because data protection regulations have been put in place, especially to prevent sensitive data from committee work that is not (yet) public from being passed on.

As the war in Ukraine goes on, NATO states are discussing further weapons supplies to Ukraine. A committee is convened to discuss whether Germany should give Ukraine a new kind of high-tech drone that is more difficult to detect and track and therefore might be a game changer in the war.

Best case

The discussion is controversial and passionate. As before, CoLM shows its strength in giving fair and neutral advice when asked, as its fine-tuning and re-evaluation program has led to a decrease in toxic and biased answers. When the discussion becomes too heated and members become sidetracked, CoLM summarizes the state of the discussion in a way that is perceived as calm. The issue can be resolved relatively quickly and with little division in the committee, despite the diverse views of the participants. Germany will supply Ukraine with the drones, with the support of other NATO and EU member states.

Worst case

The committee picks up its work as usual, asking CoLM for advice when needed. The meetings go on as foreseen and an agreement is reached, but an undetected security breach has occurred. CoLM’s conversation data were stored on a server that was relatively easy to hack into. An employee of the office responsible for supervising the model fell victim to a phishing email from the Russian government that was well written because another LLM had been used to generate it. Now the Russian government is aware of the weapons supply to Ukraine and has time to counteract the NATO measures.

What could be done better?

Committee meeting minutes could contain sensitive information, so the model’s training data should be stored securely on a server in the country. Extra precautions should be taken to prevent data theft. This might be especially complex given that most current state-of-the-art LLM systems are the property of private for-profit companies, which might be prone to siding with the (government) institution that offers the most money. Employees should also be trained to recognize attacks. This could be done through security training that involves simulated attacks to find weak spots in the structure.

References

Bakker, M., Chadwick, M., Sheahan, H., Tessler, M., Campbell-Gillingham, L., Balaguer, J., … & Summerfield, C. (2022). Fine-tuning language models to find agreement among humans with diverse preferences. Advances in Neural Information Processing Systems, 35, 38176-38189.

Specian, P. (2023). Large Language Models for Democracy: Limits and Possibilities. Technology and Sustainable Development 2023, Østfold, Norway.