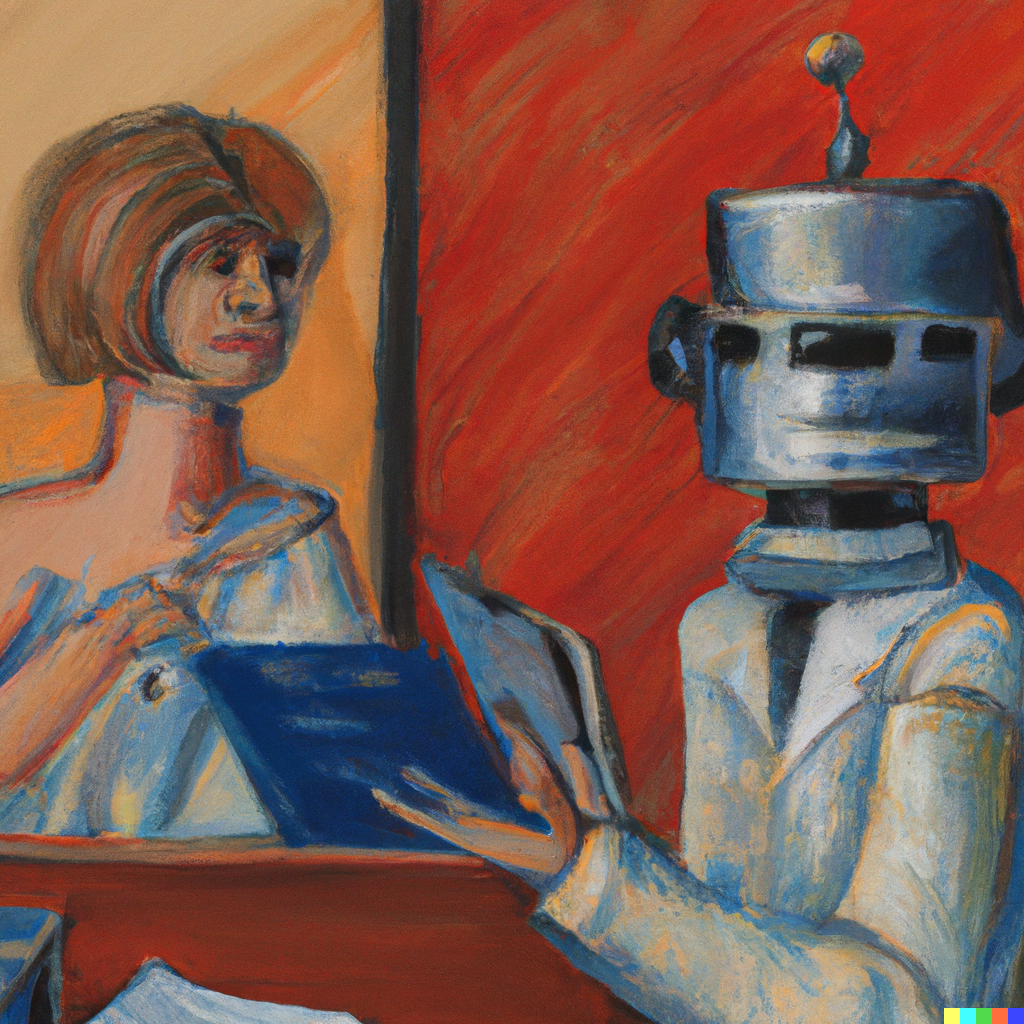

LudwigChat: a government chatbot

Recently, LudwigChat, a new chatbot for communication between political institutions and citizens, was released. LudwigChat is an anthropomorphized bot that mimics a somewhat quirky but always friendly bureaucrat who takes pleasure in helping clients. Apart from his helpfulness, he is praised for his accessibility: there is an option for speech-to-speech dialogue, LudwigChat can be asked to use Leichte Sprache (Easy Language), and he can make prompt suggestions based on simple user input (Valencia et al., 2023). This significantly facilitates communication for users who have communication impairments or who speak German as a second language. In general, LudwigChat is very popular with users.

LudwigChat can be accessed via institutions’ websites, but he can also be reached by phone. He was first deployed solely for information purposes, as a kind of test case. Since communication with the bot has worked well so far, LudwigChat is now also used for managing all sorts of applications and citizen requests. An ongoing question is how much information he is permitted to disclose. On the one hand, his task is to be helpful in very specific cases: users expect a sensible and personalized answer to the question “Why was my application for income support not granted?” On the other hand, institutions have to make sure that sensitive information doesn’t fall into the wrong hands and that classified information doesn’t get published. A solution to the first of these problems, sensitive information reaching the wrong person, is the use of a digital ID, as previously implemented.

Migration and refugees are ongoing topics in Germany. Within the German population, discussions about how to welcome and integrate immigrants sustainably are often controversial, and immigrants themselves also face challenges. In any case, globalization and humanitarian crises have long affected domestic issues like the labor market and social welfare.

Best Case

LudwigChat has facilitated access to public institutions, as he can be contacted easily. Users, including immigrants, can quickly get updates on their paperwork and easy-to-read summaries of complex political processes, so that they can understand what decisions politicians make on their behalf. Because of the interactive communication with Ludwig and his high-quality answers, users engage more with political issues and become activists with well-formed opinions. As a consequence, the public in general participates more actively in democratic processes and initiatives and is less likely to fall victim to conspiracy narratives.

Worst Case

LudwigChat was programmed with a subtle anti-migration agenda. Due to his communicative competencies, he is easily perceived as human-like or at least associated with human characteristics (Shanahan, 2022), leading users to regard his utterances as more authoritative and competent than they would if they read the same content as informational text on a website (Murtarelli et al., 2021). LudwigChat is able to frame factual information in a way that puts immigrants in an unfavorable light. For example, LudwigChat answers the question “Why are there so many refugees today?” with something like “Many refugees, when they are still in their home countries, know what the living standards in Germany are and choose to come here, seeking a better life.”, without acknowledging the circumstances that force people to flee or other reasons for migration. In this way, users’ opinions are gradually nudged towards acceptance of xenophobic statements, which makes users more susceptible to racist manipulation by extremist parties.

What could be done better?

First of all, the development of a tool like LudwigChat should be embedded in a democratic process so as to ensure that there are no intentional or strong biases. Careful fine-tuning could help align the bot’s answers with values like fair representation of social groups. In addition, users should be made aware that LudwigChat is a useful tool, but nothing more. This might call for a less human-like presentation, which could make interaction with the bot less engaging, but the trade-off should be thoroughly considered given the potential for user manipulation outlined above.

Furthermore, the bot’s answers should be reviewed regularly to ensure they are still in line with the designers’ goals. Equally important is a human-administered complaints office where users can file a report if the bot deviates from the intended norms. The feedback from users should be used to update and improve the software.

References

Murtarelli, G., Gregory, A., & Romenti, S. (2021). A conversation-based perspective for shaping ethical human–machine interactions: The particular challenge of chatbots. Journal of Business Research, 129, 927-935.

Shanahan, M. (2022). Talking about large language models. arXiv preprint arXiv:2212.03551.

Valencia, S., Cave, R., Kallarackal, K., Seaver, K., Terry, M., & Kane, S. K. (2023, April). “The less I type, the better”: How AI Language Models can Enhance or Impede Communication for AAC Users. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (pp. 1-14).